StyleGAN Salon: Multi-View Latent Optimization for Pose-Invariant Hairstyle Transfer

VISTEC

VISTEC

Rayong, Thailand

Phranakhon Rajabhat University

Phranakhon Rajabhat University

Bangkok, Thailand

CVPR 2023

StyleGAN Salon: Our method can transfer the hairstyle from any reference hair image in the top row to Tom Holland in the second row.

Our paper seeks to transfer the hairstyle of a reference image to an input photo for virtual hair try-on. We target a variety of challenging scenarios, such as transforming a long hairstyle with bangs into a pixie cut, which requires removing the existing hair and inferring how the forehead would look, or transferring partially visible hair from a hat-wearing person in a different pose. Past solutions leverage StyleGAN to hallucinate any missing parts and produce a seamless face-hair composite through so-called GAN inversion or projection. However, it remains challenging to control the hallucinations so that they accurately transfer the hairstyle and preserve the face shape and identity of the input. To overcome this, we propose a multi-view optimization framework that uses "two different views" of reference composites to semantically guide occluded or ambiguous regions. Our optimization shares information between the two poses, which allows us to produce high-fidelity and realistic results from incomplete references. Our framework produces high-quality results and outperforms prior work in a user study that consists of significantly more challenging hair transfer scenarios than previously studied.

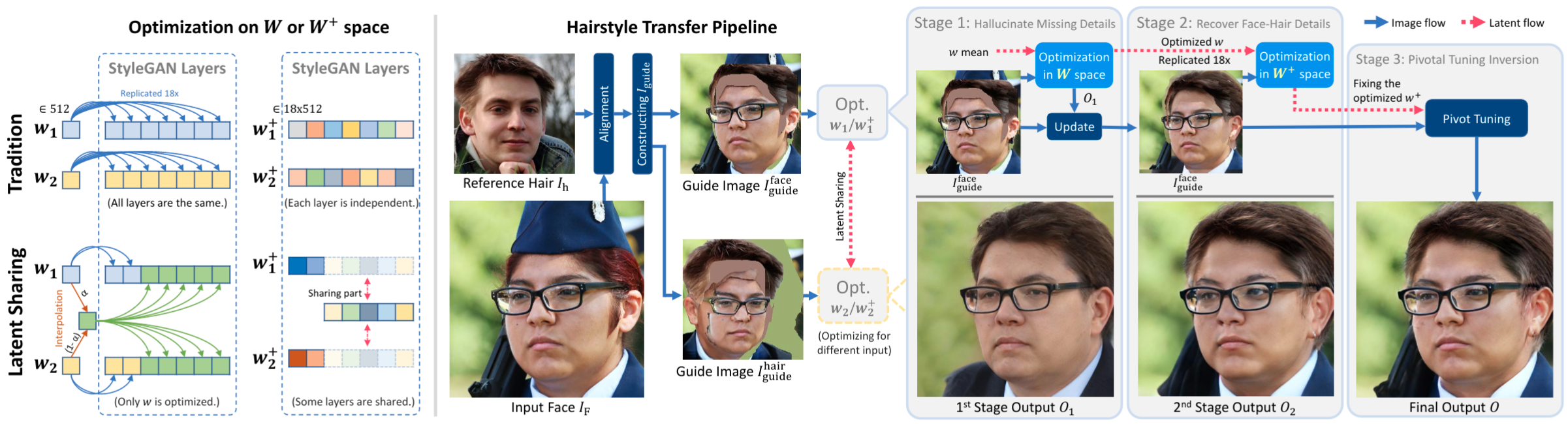

We first align the input face $I_f$ and reference hair $I_h$ and use them to construct guide images $I_{guide}$ in two different viewpoints, which specify the target appearance for each output region.
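To make the idea of a guide image concrete, the following is a minimal sketch of mask-based compositing for a single viewpoint. It is not the authors' pipeline: the function name `compose_guide`, the mask inputs, and the rule that hair overrides face where the two overlap are all assumptions made for illustration, and the alignment step is assumed to have been done already.

```python
import numpy as np

def compose_guide(face_img, hair_img, face_mask, hair_mask):
    """Build a rough guide image for one viewpoint (hypothetical helper).

    face_img, hair_img: aligned (H, W, 3) arrays.
    face_mask, hair_mask: (H, W) arrays marking usable face / hair pixels.
    Returns the composite and a boolean map of pixels that have a target.
    """
    hair_mask = hair_mask.astype(bool)
    # Assumption: where both masks are set, the reference hair takes priority.
    face_mask = face_mask.astype(bool) & ~hair_mask

    guide = np.zeros_like(face_img)
    guide[face_mask] = face_img[face_mask]
    guide[hair_mask] = hair_img[hair_mask]

    # Pixels covered by neither mask have no specified target appearance.
    known = face_mask | hair_mask
    return guide, known
```

In this sketch, calling the function once per viewpoint would give the two guide images, and the `known` map marks which output regions have a target appearance and which are left for the generator to fill in.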

Real images

FFHQ

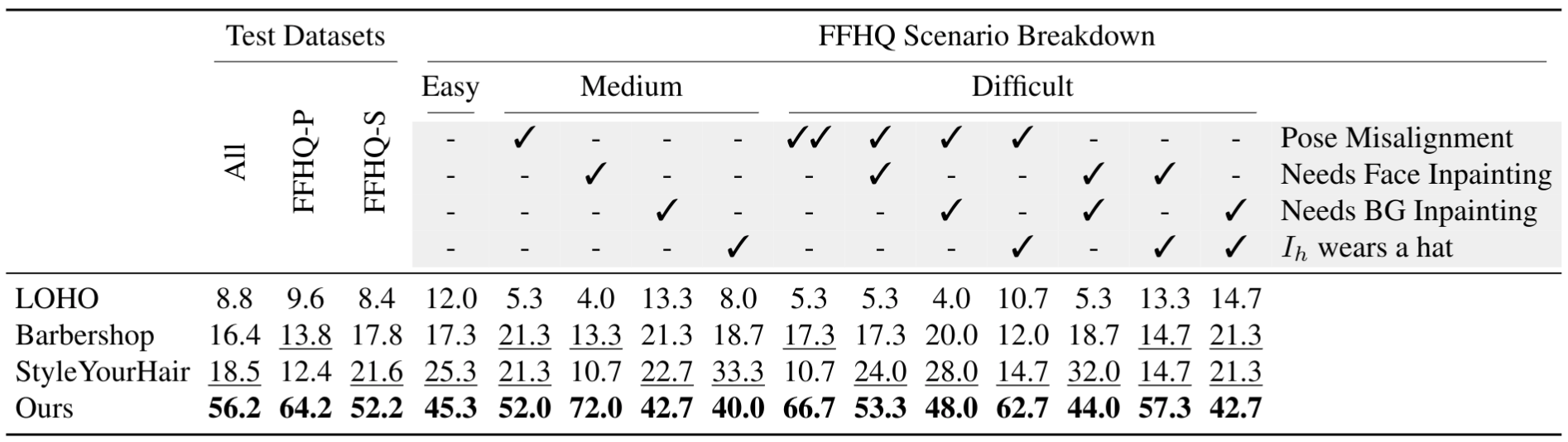

User study results on hairstyle transfer (percentage of users preferring each method). Our method outperforms state-of-the-art hairstyle transfer methods on the FFHQ dataset in all challenging scenarios. A total of 450 pairs are used in this study: 150 pairs in FFHQ-P and 300 in FFHQ-S. For each pair, we asked 3 unique participants to select the best result for hairstyle transfer.
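The preference percentages in such a study are simple vote shares. The sketch below shows the arithmetic under stated assumptions: the helper `preference_percentages` and the method labels in the usage note are hypothetical, and only the pair counts and the number of participants per pair come from the caption above.

```python
from collections import Counter

def preference_percentages(votes):
    """Return the share of votes (in percent) received by each method."""
    counts = Counter(votes)
    total = sum(counts.values())
    return {method: 100.0 * n / total for method, n in counts.items()}

# Counts stated in the caption: 150 FFHQ-P pairs, 300 FFHQ-S pairs,
# and 3 participants per pair.
pairs_ffhq_p, pairs_ffhq_s, raters_per_pair = 150, 300, 3
total_votes = (pairs_ffhq_p + pairs_ffhq_s) * raters_per_pair
```

With these numbers the study collects 1350 individual votes in total. As a usage example, `preference_percentages(["ours", "ours", "baseline_a", "ours"])` gives 75% for `"ours"` and 25% for `"baseline_a"`; both labels are placeholders, not results from the study.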

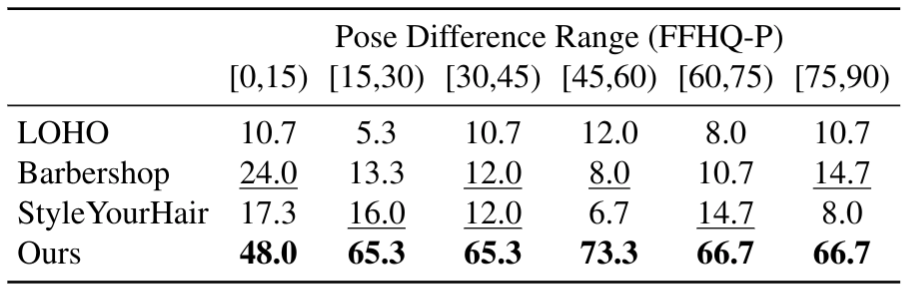

User study on pose-invariant hairstyle transfer. Our method outperforms others on all pose difference ranges.

Abstract

Our work aims to transfer the hairstyle of a reference image to an input video for virtual hair try-on. We are the first to address temporal consistency across video frames in hairstyle transfer, which allows users to experiment with different hairstyles in motion. Our method builds on StyleGAN Salon, the state-of-the-art hairstyle transfer method, and STIT, a StyleGAN-based video editor that provides temporal coherence. In our preliminary results, we observed a significant improvement over previous approaches, as supported by a user study: human evaluators consistently gave our method the highest scores among the compared methods.

BibTeX

@inproceedings{Khwanmuang2023StyleGANSalon,
  author = {Khwanmuang, Sasikarn and Phongthawee, Pakkapon and Sangkloy, Patsorn and Suwajanakorn, Supasorn},
  title = {StyleGAN Salon: Multi-View Latent Optimization for Pose-Invariant Hairstyle Transfer},
  booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2023},
}

Gage Skidmore. Tom holland speaking at the 2016 san diego comic-con international in san diego, california. @ONLINE. https://commons.wikimedia.org/wiki/File:Tom_Holland_by_Gage_Skidmore.jpg/, 2016.

Ironhammer america. File:elizabeth-olsen-1632123202.jpg @ONLINE. https://commons.wikimedia.org/wiki/File:Elizabeth-olsen-1632123202.jpg/, 2022.

Gordon Correll. File:julianne moore (15011443428) (2).jpg @ONLINE. https://commons.wikimedia.org/wiki/File:Julianne_Moore_(15011443428)_(2).jpg/, 2014.

nicolas genin. File:julianne moore 2009 venice film festival.jpg @ONLINE. https://commons.wikimedia.org/wiki/File:Julianne_Moore_2009_Venice_Film_Festival.jpg/, 2009.

David Shankbone. File:dwayne johnson at the 2009 tribeca film festival.jpg @ONLINE. https://commons.wikimedia.org/wiki/File:Dwayne_Johnson_at_the_2009_Tribeca_Film_Festival.jpg/, 2009.

Gage Skidmore. File:elizabeth olsen by gage skidmore2.jpg @ONLINE. https://commons.wikimedia.org/wiki/File:Elizabeth_Olsen_by_Gage_Skidmore_2.jpg/, 2019.

tenasia10. File:blackpink lisa gmp 240622.png @ONLINE. https://commons.wikimedia.org/wiki/File:Blackpink_Lisa_GMP_240622.png/, 2022.

GABI THORNE. Jennifer aniston reacts to tiktok’s best (and most absurd) trends @ONLINE. https://www.allure.com/story/jennifer-aniston-reacts-to-tiktok-trends/, 2022.

Albert Truuväärt. File:re kaja kallas (cropped).jpg @ONLINE. https://commons.wikimedia.org/wiki/File:RE_Kaja_Kallas_(cropped).jpg/, 2011.
